Focus Stacking, Part 2: Artefacts

Now that part 1 of this post has had time to settle, let’s press on. Focus stacking is one of the techniques known as computational photography, which also include panorama stitching, high-resolution sensor shift, multi-exposure HDR, and light field imaging. All these techniques involve multiple images that are blended in post-processing into a new type of single image or scene representation, as opposed to techniques in digital photography that work on a single capture, such as filtering and color adjustments.

These techniques may prompt the question of ethics in photography. I have absolutely no problem with time-lapsing out people, for example. Over-saturation and over-sharpening, or turning the colors of a lake in Scotland into those of the waters at Anse La Digue, are another matter. And nobody would consider the surrealistic photo-illustrations of David LaChapelle dishonest work, while this is exactly what Steve McCurry, or his now fired assistant, is accused of producing. In my opinion the problem lies not in the image itself, but in its incoherent caption and the false message that the image is supposed to support. But I am digressing.

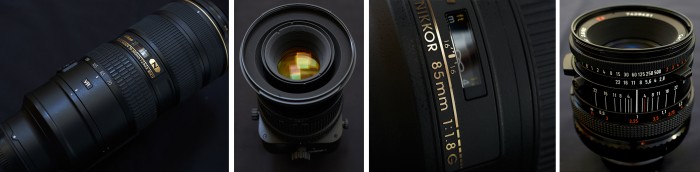

Focus stacking is particularly useful when the scene spans a large range of depths in subject space compared to the shallow depth of field obtained for a given combination of sensor size, focal length, and aperture. It will also be a way to make sense of future FF sensors with 50+ megapixels, where diffraction from stopping down will counteract the increase in acuity, compromising image quality for the sake of depth of field. Moreover, focus stacking can be useful to reduce noise in astrophotography. So there is nothing dishonest here; it is an attempt to optimize the conflicting elements of image quality: noise, diffraction, lens aberrations, and depth of field.
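The trade-off described above can be put into rough numbers. The sketch below is only an illustration under textbook assumptions: the thin-lens depth-of-field formulas, a circle of confusion of 0.03 mm (a common full-frame convention, not a value from this post), and green light at 550 nm for the Airy disk. The function names are made up for this example.

```python
import math

def hyperfocal_mm(focal_mm, f_number, coc_mm=0.03):
    """Hyperfocal distance (thin-lens approximation). coc_mm is an assumed value."""
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm

def dof_limits_mm(focal_mm, f_number, subject_mm, coc_mm=0.03):
    """Near and far limits of acceptable sharpness for a given focus distance."""
    h = hyperfocal_mm(focal_mm, f_number, coc_mm)
    near = subject_mm * (h - focal_mm) / (h + subject_mm - 2 * focal_mm)
    if subject_mm >= h:
        # Focused at or beyond the hyperfocal distance: sharp out to infinity.
        return near, math.inf
    far = subject_mm * (h - focal_mm) / (h - subject_mm)
    return near, far

def airy_disk_um(f_number, wavelength_um=0.55):
    """Diameter of the Airy disk (first minimum) in micrometres."""
    return 2.44 * wavelength_um * f_number

def pixel_pitch_um(width_mm, height_mm, megapixels):
    """Pixel pitch of a sensor, assuming square pixels filling the whole area."""
    return math.sqrt(width_mm * height_mm / (megapixels * 1e6)) * 1000.0

if __name__ == "__main__":
    # Hypothetical close-up: 100 mm lens, subject at 0.5 m, full-frame 50 MP sensor.
    pitch = pixel_pitch_um(36.0, 24.0, 50.0)
    for n in (4, 8, 16):
        near, far = dof_limits_mm(100.0, n, 500.0)
        print(f"f/{n}: depth of field {far - near:.1f} mm, "
              f"Airy disk {airy_disk_um(n):.1f} um vs pixel pitch {pitch:.1f} um")
```

With these assumed values, stopping down from f/4 to f/16 widens the depth of field only from a few millimetres to a couple of centimetres, while the Airy disk grows to several times the pixel pitch. That is the situation where a stack of frames at a moderate aperture becomes attractive.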

There is, of course, the alternative of using tilt-shift lenses, but these are limited to scenes that fit an inclined focal plane (a continuous depth map), as in landscape photography. The subject of the image below would also qualify.