Now that part 1 of this post has had time to settle, let’s press on. Focus stacking is one of the techniques known as computational photography, which also include panorama stitching, high-resolution sensor shift, multi-exposure HDR, and light-field capture. All these techniques involve multiple images that are blended in post-processing into a new type of single image or scene representation, as opposed to techniques in digital photography that work on a single capture, such as filtering and color adjustments.

These techniques may prompt the question of ethics in photography. I have absolutely no problem with time-lapsing out people, for example. Over-saturation and over-sharpening, or turning the colors of a lake in Scotland into those of the waters at Anse La Digue, is another matter. And nobody would consider the surrealistic photo-illustrations of David LaChapelle dishonest work, while this is exactly what Steve McCurry, or his now-fired assistant, is accused of producing. In my opinion the problem lies not in the image itself, but in its incoherent caption and the false message that the image is supposed to support. But I am digressing.

Focus stacking is particularly useful in situations where the scene has a large range of depths in the subject space compared to the shallow depth of field obtained for a given combination of sensor size, focal length, and aperture. It will also be a way to make sense of future full-frame (FF) sensors with 50+ megapixels, where stopping down no longer increases acuity because diffraction counteracts it, so that image quality is compromised for depth of field. Moreover, focus stacking can be useful to reduce noise in astrophotography. So there is nothing dishonest here; it is an attempt to optimize the conflicting elements of image quality: noise, diffraction, lens aberrations, and depth of field.
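To put rough numbers on the diffraction argument, here is a small back-of-the-envelope sketch. It assumes square pixels, green light at 550 nm, and the usual Airy-disk diameter of 2.44 λN (first dark ring); the function names are mine and purely illustrative.

```python
import math

def pixel_pitch_um(width_mm, height_mm, megapixels):
    """Pixel pitch in micrometres, assuming square pixels that fill the sensor."""
    return math.sqrt(width_mm * height_mm / (megapixels * 1e6)) * 1000.0

def airy_diameter_um(f_number, wavelength_nm=550.0):
    """Diameter of the Airy disk (to the first dark ring) in micrometres."""
    return 2.44 * wavelength_nm * 1e-3 * f_number

pitch = pixel_pitch_um(36, 24, 50)  # 50 MP full-frame sensor
for n in (2.8, 5.6, 8, 11, 16):
    airy = airy_diameter_um(n)
    print(f"f/{n}: Airy disk {airy:.1f} um, about {airy / pitch:.1f} pixels wide")
```

With a pitch of roughly 4 µm, the Airy disk already spans more than two pixels at f/8, which is why stopping down further buys depth of field but not detail.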

There is, of course, the alternative of using tilt-shift lenses, but this is constrained to inclined focal planes (continuous depth maps), for example in landscape photography. The subject of the image below would also qualify.

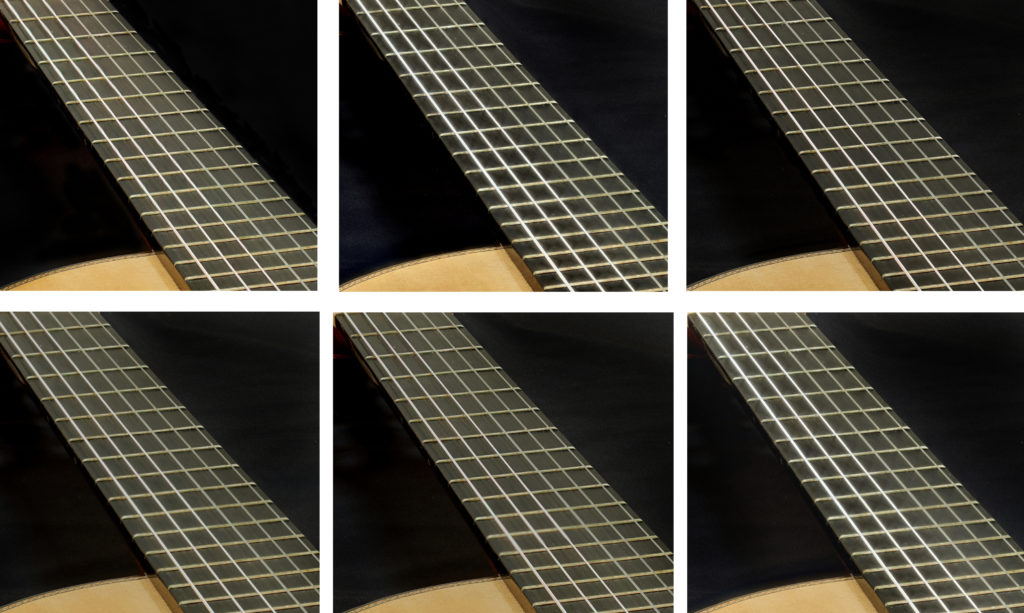

The starting point for focus stacking is a series of images focused at different planes, such that for every area of the image plane at least one frame has a circle of confusion small enough for the final output to be considered sharp. The key is to ensure that the depth of field overlaps in adjacent photos of the stack. This way, no out-of-focus bands will be visible in the final composite. Helicon Remote, ControlMyNikon, and other software allow you to step focus automatically, and more precisely, by driving autofocus lenses or controlling motorized focusing rails.
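The overlap requirement can be turned into a simple focus plan. The sketch below is my own illustration, not what Helicon Remote or ControlMyNikon actually do. It assumes the common approximation that the near and far limits satisfy 1/near = 1/s + 1/H and 1/far = 1/s − 1/H, with H the hyperfocal distance, which holds when the focus distance s is much larger than the focal length (so not for true macro work). The circle of confusion of 0.03 mm and the 20% overlap are assumed defaults.

```python
import math

def hyperfocal_mm(focal_mm, f_number, coc_mm=0.03):
    """Hyperfocal distance for a given circle of confusion."""
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm

def dof_limits(focus_mm, hyperfocal):
    """Approximate near and far limits of the depth of field."""
    near = 1.0 / (1.0 / focus_mm + 1.0 / hyperfocal)
    far_recip = 1.0 / focus_mm - 1.0 / hyperfocal
    far = math.inf if far_recip <= 0 else 1.0 / far_recip
    return near, far

def plan_focus_distances(near_mm, far_mm, focal_mm, f_number,
                         coc_mm=0.03, overlap=0.2):
    """Focus distances (near to far) whose depths of field overlap."""
    h_recip = 1.0 / hyperfocal_mm(focal_mm, f_number, coc_mm)
    # In reciprocal distance every frame covers a band of width 2/H,
    # so the frames are evenly spaced there, minus the desired overlap.
    step = 2.0 * h_recip * (1.0 - overlap)
    far_recip = 0.0 if math.isinf(far_mm) else 1.0 / far_mm
    d = 1.0 / near_mm - h_recip  # first frame: near limit sits on near_mm
    distances = []
    while True:
        d = max(d, h_recip)  # never focus beyond the hyperfocal distance
        distances.append(1.0 / d)
        if d - h_recip <= far_recip:  # far limit reaches the end of the scene
            return distances
        d -= step

plan = plan_focus_distances(500, 2000, focal_mm=50, f_number=8)
print([round(s) for s in plan])
```

Note how the steps grow with distance: close to the camera the frames have to be packed tightly, while a single frame at the hyperfocal distance covers everything out to infinity.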

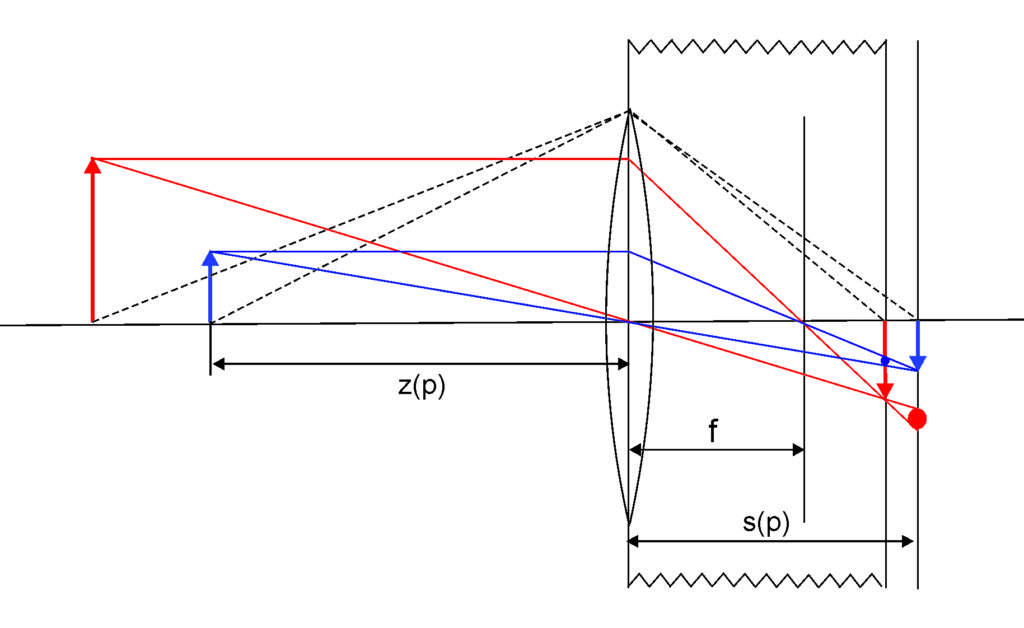

Consider a depth map z(p), where p = (px, py) is the pixel location on the sensor and z(p) is the axial distance between the lens and the subject. The Gaussian lens formula yields the relation between a scene point and its conjugate in the sensor space, so that the sensor distance map is given by 1/s(p) = 1/f - 1/z(p) at a given aperture and focal length f. The software then calculates a set of sensor positions with apertures Ni (and corresponding blur radii ri) to approximate a camera setting with the sensor at s*, small aperture N* and small blur radius r*. Manual focusing for stacks requires a bit of expertise to obtain enough overlap in the stack to avoid out-of-focus banding in the composite image.
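As a minimal sketch of the lens formula above, the following computes the sensor distance for a subject distance and the geometric blur diameter when the sensor sits elsewhere. It assumes an ideal thin lens with aperture diameter f/N and ignores diffraction and aberrations; the function names are my own.

```python
def sensor_distance(z_mm, focal_mm):
    """Thin-lens conjugate of a subject at distance z: 1/s = 1/f - 1/z."""
    if z_mm <= focal_mm:
        raise ValueError("subject must be farther away than the focal length")
    return 1.0 / (1.0 / focal_mm - 1.0 / z_mm)

def blur_diameter(z_mm, sensor_mm, focal_mm, f_number):
    """Geometric blur-circle diameter on a sensor placed at sensor_mm."""
    s = sensor_distance(z_mm, focal_mm)
    aperture_mm = focal_mm / f_number
    # Similar triangles: the light cone converges at s and has width
    # aperture_mm at the lens, so it has this width at the sensor.
    return aperture_mm * abs(sensor_mm - s) / s

# A 50 mm lens at f/5.6 focused at 1 m; how blurred is a point at 1.2 m?
s_focus = sensor_distance(1000, 50)
print(round(s_focus, 3), round(blur_diameter(1200, s_focus, 50, 5.6), 4))
```

A frame counts as sharp at pixel p when this diameter stays below the accepted circle of confusion, which is the condition the stack has to satisfy somewhere for every p.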

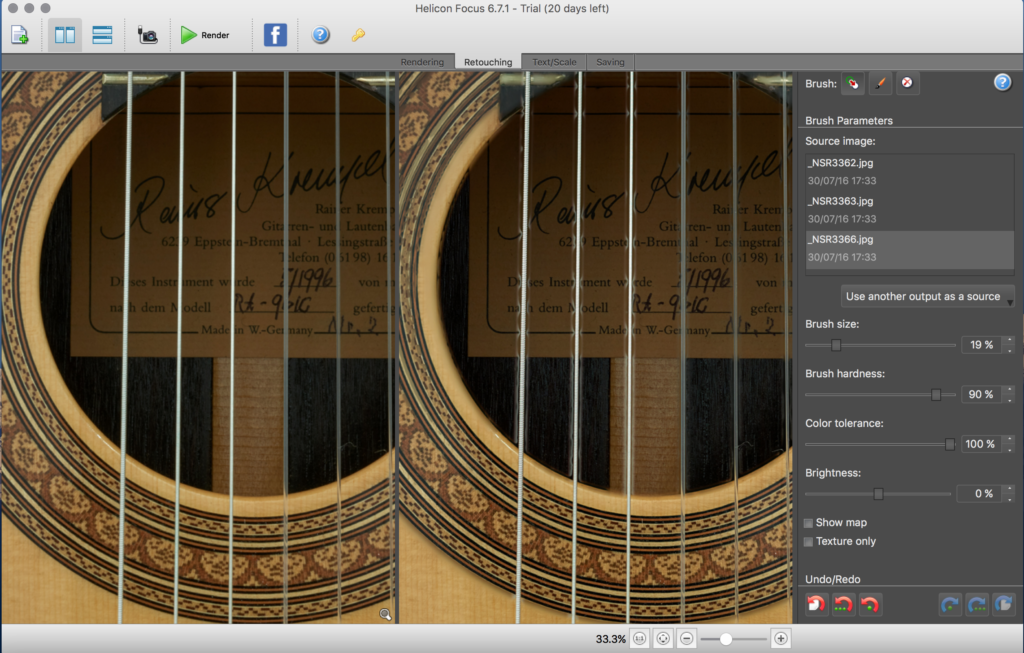

Zerene Stacker and Helicon Focus offer depth-map, contrast-weight, and the so-called pyramid methods, the last of which works better for intersecting objects and crossing lines, for example in tree branches. The depth-map algorithm picks, for every pixel position, the source image (taken at a given aperture and focus distance) in which that pixel is sharpest. The adjustable parameters are radius and smoothing. Fine details and thin lines require a small radius, while increasing the radius and smoothing parameters reduces edge halos at the expense of resolution.
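To make the depth-map idea concrete, here is a toy version in plain Python. It is only a sketch of the general principle and certainly not the actual algorithm of either package: sharpness is assumed to be the absolute Laplacian, the `radius` parameter is a simple box average of that measure, and no smoothing of the resulting depth map is applied. Images are nested lists of gray values.

```python
def laplacian_energy(img):
    """Absolute 4-neighbour Laplacian; borders are replicated."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            neighbours = (img[max(y - 1, 0)][x] + img[min(y + 1, h - 1)][x]
                          + img[y][max(x - 1, 0)] + img[y][min(x + 1, w - 1)])
            out[y][x] = abs(neighbours - 4 * img[y][x])
    return out

def box_mean(m, radius):
    """Average each value over a (2*radius+1)^2 window clipped to the image."""
    h, w = len(m), len(m[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [m[j][i]
                      for j in range(max(y - radius, 0), min(y + radius, h - 1) + 1)
                      for i in range(max(x - radius, 0), min(x + radius, w - 1) + 1)]
            out[y][x] = sum(window) / len(window)
    return out

def stack_depth_map(images, radius=1):
    """Return (composite, depth), where depth holds the sharpest slice index."""
    scores = [box_mean(laplacian_energy(im), radius) for im in images]
    h, w = len(images[0]), len(images[0][0])
    depth = [[max(range(len(images)), key=lambda i: scores[i][y][x])
              for x in range(w)] for y in range(h)]
    composite = [[images[depth[y][x]][y][x] for x in range(w)] for y in range(h)]
    return composite, depth

# Two synthetic frames: one with detail on the left, one with detail on the right.
detail = lambda x, y: 100 * ((x + y) % 2)
left_sharp = [[detail(x, y) if x < 3 else 50 for x in range(6)] for y in range(4)]
right_sharp = [[50 if x < 3 else detail(x, y) for x in range(6)] for y in range(4)]
composite, depth = stack_depth_map([left_sharp, right_sharp])
print(depth[0])
```

A larger `radius` makes the choice of slice more stable in noisy or low-contrast areas, but it also lets a sharp edge claim neighbouring pixels that belong to another slice, which mirrors the trade-off between halos and resolution described above.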

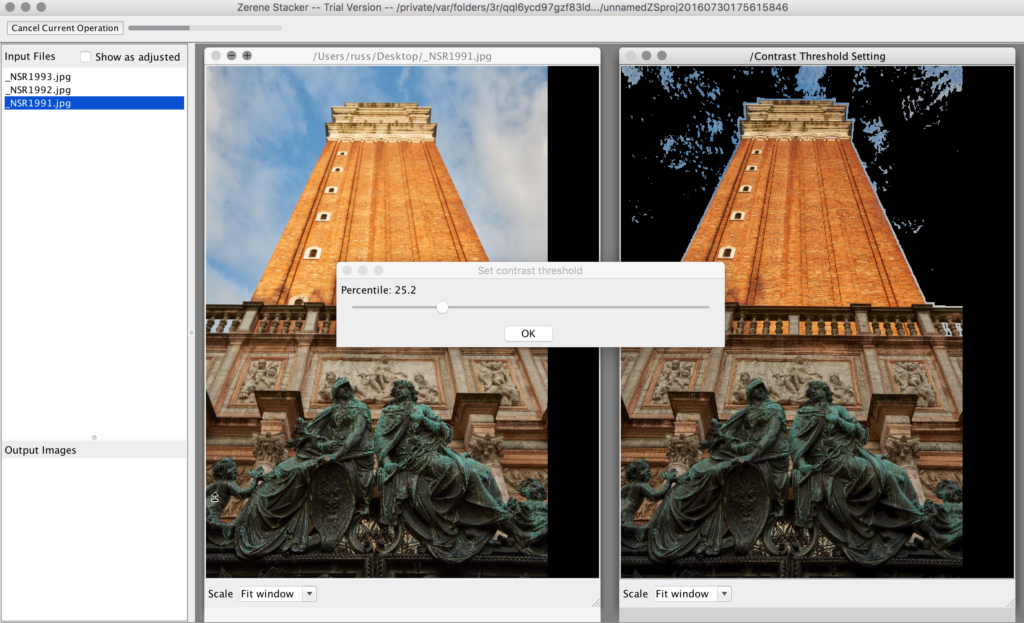

The contrast-weight method in Zerene Stacker offers a contrast threshold setting that is particularly useful to avoid artefacts from moving clouds. In the example below the setting is deliberately not optimal, for illustration purposes.

However, no stacking software can handle edge halos from discontinuities in the depth map, because a single ray from an object will be observed twice in the composite (once point-like, once with a blur circle), which is physically impossible in one single image. This is shown in the following illustration.

Real photographs of opaque objects do not contain halos, because a ray that has been captured by one pixel cannot be captured by another, even with a nonplanar sensor surface, which is in fact what the stacking process simulates. In a stack, however, pixels at different sensor distances are not captured simultaneously, which may result in double-counted rays.

One can smooth these discontinuities in the sensor-distance map by linearly interpolating between the two closest stack slices, but this results in visible blurriness near depth edges.
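For a single pixel, the interpolation between the two closest slices might look like the sketch below. This is my own minimal reading of the idea, assuming the slices are sorted by sensor distance and the target sensor distance comes from an already smoothed map; all names are illustrative.

```python
from bisect import bisect_right

def blend_pixel(values, slice_positions, s_target):
    """Linearly blend the two slices that bracket the target sensor distance.

    values[i] is this pixel's value in slice i; slice_positions must be
    sorted in ascending order and have the same length as values.
    """
    if s_target <= slice_positions[0]:
        return values[0]
    if s_target >= slice_positions[-1]:
        return values[-1]
    hi = bisect_right(slice_positions, s_target)
    lo = hi - 1
    t = (s_target - slice_positions[lo]) / (slice_positions[hi] - slice_positions[lo])
    return (1.0 - t) * values[lo] + t * values[hi]

# Halfway between the slices at 51 mm and 52 mm the pixel is an equal mix.
print(blend_pixel([10, 20, 40], [50.0, 51.0, 52.0], 51.5))
```

Halfway between two slices the pixel is an equal mix of two frames, neither of which is in focus there, and that is where the blurriness comes from.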

A workaround is to record a matrix, i.e., a 2D family of photographs with varying focus as well as varying aperture on either side of the depth edges. Wherever the depth map is flat, a composite of properly focused wide-aperture photographs will be sharper (less diffraction) and less noisy than its narrow-aperture counterpart. But at depth discontinuities one can trade off noise and diffraction against halo formation.

In this case, manual retouching is required with all three tested software packages.

The final example shows a retouched image from a stack of 4 images focused at the strings, fretboard, soundboard, and label, all at f/5.6, with an additional capture at f/14 focused at the soundboard. I also applied some extra sharpening to the f/14 image; click for ultimate pixel-peeping pleasure. SR