The truth is in the print; what looks good on the screen doesn’t necessarily hold in print (and vice versa). Having gone through the first set of cartridges and a pile of paper, I am glad that I haven’t deleted my original files. In a number of cases I had to return to the RAW files and process them with adjusted settings. Let me explain:

I never bought the concept of normal viewing distance, which suggests that larger prints are viewed from further away (usually between 1.5 and 2 times the diagonal of the print) and therefore require less resolution. While this may be true for a billboard seen from a highway, viewers will move closer to an exhibition print in a gallery or private home, as long as there is more detail to be resolved. For me, a print that looks OK at normal viewing distance but falls apart when moving closer doesn’t qualify. And even though my eyes can no longer resolve one minute of arc, there is a clearly visible loss of tonality and color separation once you go below 300 ppi, in particular on glossy paper (fine-art media being a bit less demanding).
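To put numbers on the viewing-distance argument, here is a rough sketch in Python. It assumes the textbook acuity of one minute of arc mentioned above and a close-inspection distance of 10 inches (about 25 cm), which is my assumption and not a measured value. The function names are made up for this illustration.

```python
import math

ARC_MINUTE_RAD = math.radians(1 / 60)  # one minute of arc, in radians


def resolvable_ppi(distance_in: float, acuity_arcmin: float = 1.0) -> float:
    """Pixel density at which one pixel subtends `acuity_arcmin` at this distance."""
    pixel_size_in = distance_in * math.tan(acuity_arcmin * ARC_MINUTE_RAD)
    return 1.0 / pixel_size_in


def normal_viewing_distance(width_in: float, height_in: float, factor: float = 1.5) -> float:
    """'Normal' viewing distance as a multiple of the print diagonal."""
    return factor * math.hypot(width_in, height_in)


# Paper sizes in inches, as quoted in this article.
prints = {"A2": (16.5, 23.4), "A1": (23.4, 33.1)}

for name, (w, h) in prints.items():
    d = normal_viewing_distance(w, h)
    print(f"{name}: viewed from {d:.0f} in, about {resolvable_ppi(d):.0f} ppi would do")

# Assumed close-inspection distance of 10 inches.
print(f"Close up (10 in): about {resolvable_ppi(10):.0f} ppi")
```

By this arithmetic a print judged only at "normal" distance gets away with well under 100 ppi, while a viewer who steps up to it can make use of more than 300 ppi.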

There is a fair risk of confusion about “dpi” (dots per inch) and “ppi” (pixels per inch), which are often used synonymously for resolution. In fact, pixels are made up of sub-pixels: red, green and blue. Regrettably, some manufacturers refer to these sub-pixels as dots to boost the dpi numbers of their screens. If you are looking at an image on a screen, the image ppi setting doesn’t matter because the total resolution of your monitor is fixed. If we take, for example, the MacBook Pro Retina 15”, we have 2880 x 1800 pixels native resolution (at 220 ppi), which allows you to view about half an image from the D810 (7360 x 4912 pixels) at 50%.
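The "about half" figure can be checked with a few lines of Python. This is only a sketch: it assumes the whole screen is available to the image (no menus or palettes), and `visible_fraction` is a name invented for this example.

```python
def visible_fraction(image_px, screen_px, zoom):
    """Fraction of the image area that fits on screen at a given zoom factor."""
    img_w, img_h = image_px
    scr_w, scr_h = screen_px
    scaled_w, scaled_h = img_w * zoom, img_h * zoom
    # The screen can never show more than the scaled image itself.
    shown_w = min(scr_w, scaled_w)
    shown_h = min(scr_h, scaled_h)
    return (shown_w * shown_h) / (scaled_w * scaled_h)


d810 = (7360, 4912)    # image size quoted above
retina = (2880, 1800)  # native screen resolution quoted above

print(f"Visible at 50%: {visible_fraction(d810, retina, 0.5):.0%}")
print(f"Visible at 100%: {visible_fraction(d810, retina, 1.0):.0%}")
```

Note that the image ppi setting never enters the calculation; only pixel counts do.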

Consequently, up- or downsampling images to different ppi numbers only matters for printing. Printers reproduce an image by layering tiny droplets of ink to create a range of hues using the so-called subtractive color model, and this is what dpi really measures: the droplet density. So if you are printing a 300 ppi image at 600 dpi, each pixel will consist of 4 dots. The Canon printer can place ink droplets with a minimum pitch of 1/2400 inch in horizontal and 1/200 inch in vertical direction.
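The dots-per-pixel relation is plain arithmetic. The sketch below assumes an idealized grid in which each image pixel maps onto a rectangular block of droplet positions; real drivers dither and overlap droplets, so take it as an illustration only. The function name is hypothetical.

```python
def dots_per_pixel(dpi_horizontal: float, dpi_vertical: float, image_ppi: float) -> float:
    """Droplet positions available per image pixel on an idealized grid."""
    return (dpi_horizontal / image_ppi) * (dpi_vertical / image_ppi)


# The example from the text: a 300 ppi image printed at 600 dpi.
print(dots_per_pixel(600, 600, 300))  # 2 x 2 positions per pixel

# Horizontal droplet positions per pixel at the 1/2400 inch pitch quoted above.
for ppi in (300, 600):
    print(f"{ppi} ppi: {2400 / ppi:.0f} positions across one pixel")
```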

It is common wisdom that the native output resolution of the Canon printers corresponds to 300 ppi (it is 360 ppi for the EPSONs) for all but the “Print Quality Highest” setting. However, as already said, the printer needs a large number of droplets to render one image pixel, although it only operates in multiples of 300 dpi. Some of the higher print resolution levels are therefore achieved using proprietary techniques including extra passes and interpolations. A 600 ppi resolution test target created by Bart van der Wolf reveals that the printer is definitely capable of resolving more than 300 ppi, with some aliasing showing above 450 ppi and a considerable loss of contrast at 600 ppi, all of this visible only under a 10x loupe.

So the question arises whether to send “nonstandard” images, i.e., images with a resolution higher than 300 ppi, to the printer driver, to downsample them using Photoshop (bicubic sharpen), or to upsample them to the next higher level of 600 ppi. For the latter a RIP (raster image processor) is often recommended instead of the printer driver. RIPs were once used to rasterize vector graphics (PostScript) into pixels and are now often used for workflow and color reasons. Industrial-level printers don’t come with any driver at all, only with RIP software that accesses all printer channels directly, such as the extra colors and light inks. ImagePrint is one of the most popular of them, and because it has direct print-head control it is claimed that it can handle higher resolution and obtain better shadow detail. However, ImagePrint only drives Epson printers, so I cannot comment any further.

I recently ran a number of tests with an image of ample high-frequency detail and mid-frequency modulation, where the source file was about 450 ppi for the A3+ size. I downsampled the image to 300 ppi and upsampled it to 600 ppi (using Photoshop bicubic interpolation; obviously at the same print size), and put the different driver settings through their paces. Well, it’s subtle, but it’s also obvious that the battleground isn’t the resolution; it’s how the driver handles the mid-frequency modulation. The 300 ppi image (Print Quality Standard setting) is arguably as sharp, maybe even a tad sharper to my eye, but the 600 ppi image at “Print Quality Highest” does have some inherent smoothness of tonality that can’t be ignored. Uprezzing to 600 ppi also reduces some of the dithering artifacts, but this can only be seen under a 10x loupe. But if you are aiming at an organic, more film-like look, then noise and dithering are your friends, not enemies.

This is also the reason why you can’t see the difference between 8-bit and 16-bit outputs. To test this, I created a 16-bit ramp in Photoshop and saved a second version as an 8-bit JPEG. Both files show banding on the screen, while I cannot see any banding on the printed images. But I would not bother with reducing the bit depth just to save a few seconds on the data transmission to the printer. Any subsequent adjustments in Photoshop, such as exposure or other changes, cause a loss of certain tonal values, and therefore the bit depth must remain as high as possible.
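The loss of tonal values through adjustments can be illustrated with a simulated ramp. The sketch below is a simplified model, not what Photoshop does internally: it applies a single gamma-style tone adjustment (the value 0.8 is an arbitrary assumption) in either an 8-bit or a 16-bit working space, then counts how many distinct levels survive in the final 8-bit output.

```python
def surviving_levels(working_bits: int, gamma: float = 0.8) -> int:
    """Count distinct 8-bit output levels after one tone adjustment.

    The ramp is stored, adjusted, and re-quantized at `working_bits`,
    then reduced to 8 bits for output.
    """
    max_value = 2 ** working_bits - 1
    output_levels = set()
    for v in range(max_value + 1):
        adjusted = (v / max_value) ** gamma        # tone adjustment on a 0..1 scale
        stored = int(adjusted * max_value + 0.5)   # re-quantize in the working depth
        output_levels.add(int(stored / max_value * 255 + 0.5))  # final 8-bit value
    return len(output_levels)


print("8-bit workflow: ", surviving_levels(8), "of 256 levels")
print("16-bit workflow:", surviving_levels(16), "of 256 levels")
```

In the 8-bit case some output levels are skipped in the shadows and others merge in the highlights, while the 16-bit ramp still fills every 8-bit level after the same adjustment. Each further edit compounds the effect.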

How does all this influence real-world applications? For the 36 MP sensor of the D810 (with 4800 pixels on the short side) the maximum print size at 300 ppi is about 16” or 40 cm on the short side, or roughly A2 (420 x 594 mm, 16.5 x 23.4”) with a little print margin required by the head (Canon recommends at least 3.4 mm). Prints with twice the area (edges longer by a factor of the square root of two), i.e., A1 (594 x 841 mm, 23.4 x 33.1”), already leave something to be desired, unless you apply the inglorious concept of “normal viewing distance”.
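The print-size arithmetic can be sketched as follows. The example uses the full 7360 x 4912 pixel frame quoted earlier, ignores the print margins, and assumes the image is fitted to the paper without cropping. The helper names are invented for this illustration.

```python
MM_PER_INCH = 25.4


def print_length_mm(pixels: int, ppi: float) -> float:
    """Printed length of one image edge at a given pixel density."""
    return pixels / ppi * MM_PER_INCH


def fit_ppi(image_px, paper_mm) -> float:
    """Pixel density when the uncropped image is scaled to fit the paper.

    Both tuples are (long edge, short edge). The tighter edge sets the scale,
    which corresponds to the larger of the two ppi values.
    """
    return max(px / (mm / MM_PER_INCH) for px, mm in zip(image_px, paper_mm))


d810 = (7360, 4912)

short_mm = print_length_mm(d810[1], 300)
long_mm = print_length_mm(d810[0], 300)
print(f"At 300 ppi: {short_mm:.0f} x {long_mm:.0f} mm")

for name, paper in {"A2": (594, 420), "A1": (841, 594)}.items():
    print(f"{name}: about {fit_ppi(d810, paper):.0f} ppi")
```

A2 lands just above 300 ppi, whereas A1 drops to a little over 200 ppi, which is where uprezzing comes in.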

This leads us to the issue of upscaling or uprezzing, as it is often called, if you want to print larger. Of course one could start with large-format film and a high-resolution drum scan, easily yielding an equivalent of more than 200 MP albeit with less pixel acuity at 100%. Or, subject permitting, create multi-stitch images using pano-gimbals or setups like this.

For any uprezzing procedure, the caveat is that there is no margin for error. The raw data must be perfect to start with: critical focus, complete suppression of camera shake, and optical quality of the lens. Clearly, an upgrade of the equipment from 36 to 50 MP won’t be enough, in particular if the resolution is limited by the lens*.

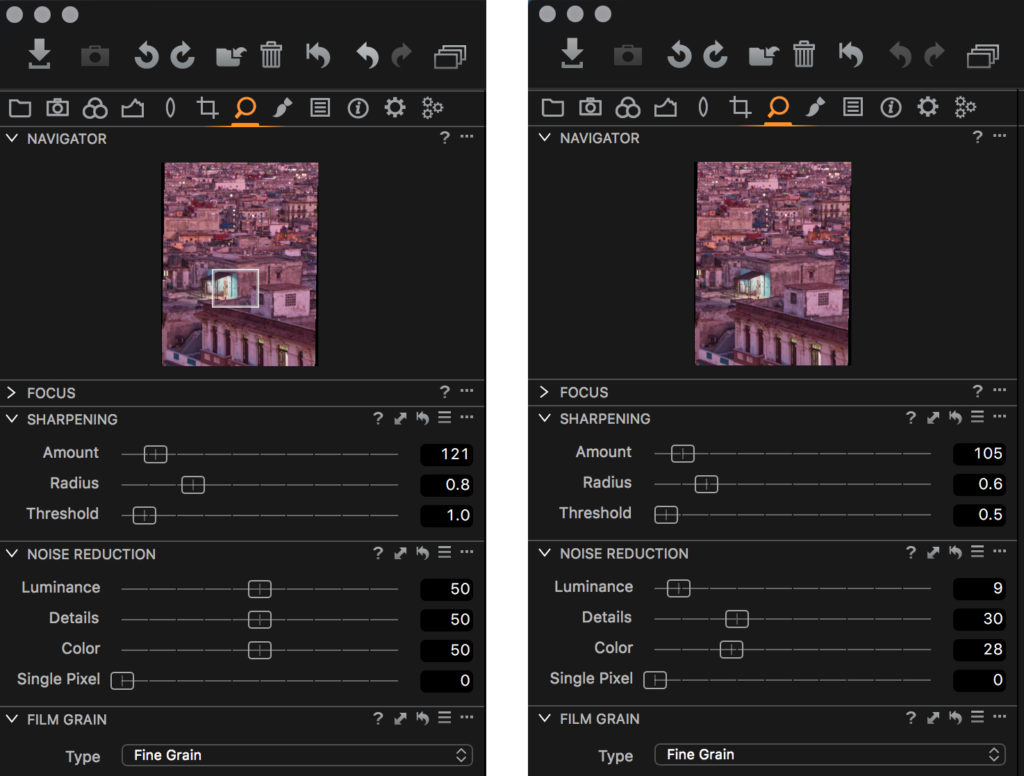

However, in-camera processing (and RAW file “cooking”) applies too much noise reduction and too much sharpening. This is also true for the default setting of my preferred raw converter, CaptureOne. Sharpening and noise reduction go hand in glove because one must avoid the sharpening of noise. On the other hand, I don’t get why camera reviewers are so obsessed with noise while at the same time praising the organic look of film grain. Fine tonal gradations are in the luminance channel and are simply leveled out when the luminance noise reduction is too high. If there is any sharpening artifact (halo) visible at the 200% view in Photoshop, it will result in a harsh “digital” look once uprezzed and printed at double size (even though you may not be able to see these halos with the naked eye).

To come back to the discussion on analogue versus digital capture, the caveat is not in the capture medium but in the image preparation for screen viewing. There is a latent risk of over-sharpening due to pixel peeping at 100%, with the risk of losing tonality that is much harder to judge on the screen with its reduced gamut. The best-looking version of an image on your screen will not necessarily make the best print. Soft proofing has a part to play in this, but a relatively minor one compared to test prints. And this is the reason for my need to reprocess the original RAW files (and scans) for printing.

Running a test with uprezzed files from CaptureOne, Photoshop, and Perfect Resize by ON1 (formerly Genuine Fractals), I have to give it to the ON1 results for their appearance of sharpness while suppressing halos and other artifacts. ON1 also has features for gallery wrap (extended margins for canvas on wooden stretcher bars) and tiling (multi-board mosaics). But the differences in achieved image quality are very subtle.

SR

* But oversampling with the high-resolution sensor shift would. The 80 MP images from the Olympus OM-D yield very little in terms of resolution (the limiting factor being the resolving power of the lens) but result in a smoother, more organic look of the final image printed at the same output size. On an equal footing, an 80 MP full frame camera would have its merits, although I wouldn’t expect the ultimate pixel acuity.

Dirk

27 Aug 2017: Thanks Stephan – very interesting entry!

Many greetings from your readers in Canada.

Stephan Russenschuck

27 Aug 2017: Thanks Dirk, I guess you went south to photograph the eclipse? Stephan